Programming Language Battles

A not-so-brief story about the evolution of programming languages#

This article was saved from a long post I made on Telegram, around 2018! It was found when I was trying to configure Helix on Windows, but that’s another story :-D.

I made some corrections to the original text and added some links, but remember that the text came from a chat and is more informal than a historical text. Visit the links for a broader view of each subject.

Battles here in the sense of comparisons about which is the best language to do something, which is the most productive, fastest, most used, most modern, etc. These battles about programming languages are frequent in groups and mailing lists. Many ignore that everything turns into instructions that the machine is capable of executing; what changes are the methods to achieve this result and the final performance.

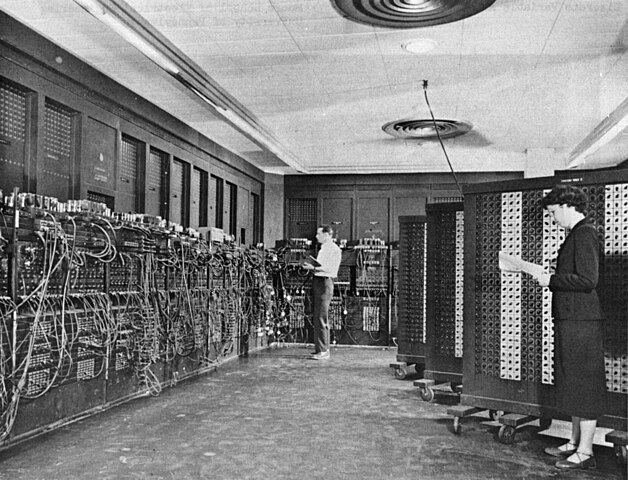

In the beginning, programming was complicated and done with wires (speaking of computers from the World War II era).

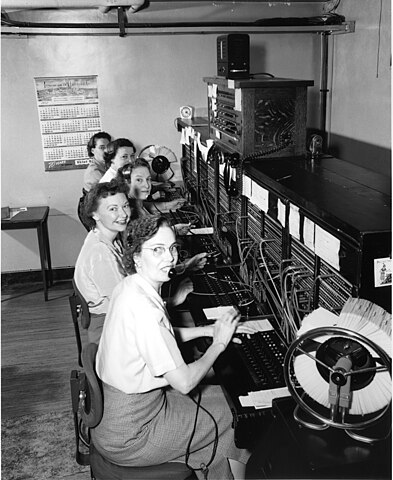

To change a program, it was necessary to reconfigure panels with various connections. These panels resemble those used to make telephone connections in the early 20th century.

Due to the great difficulty in reprogramming these computers, the advantage of making programming flexible and storing data and programs in memory was seen. Programs became a special type of data. Von Neumann Architecture

Computers and programs began to grow. In the beginning, programmers only programmed in machine code, instructions that were encoded for each processor.

| Machine Code | Architecture |

|---|---|

| 6689D86689C3 | Intel x86 |

| 89D889C3 | AMD x64 |

If you have never seen a program in machine code, you just saw two. The first line shows the code for the Intel x86 architecture (32 bits) and the following one for the AMD x64 architecture (64 bits). These programs do almost nothing, just moving data from one register to another.

Then came the idea of grouping similar instructions into mnemonics (abbreviations that help memorize the meaning), assembly language, or assembly language appeared:

0: 89 d8 mov eax, ebx

2: 89 c3 mov ebx, eax

See the previous program, for Intel x64 architecture, but this time, with the assembly instructions on the right. The mov instruction copies data from one place to another. In the first line, the value from the ebx register to the eax register.

An instruction in assembly can generate several different machine instructions:

mov register, memory

mov memory, register

They are the same assembly instruction (mov), but different in machine language, as the CPU needs to know precisely which instruction to execute. Depending on whether the mov is used with two registers or between a register and a memory address, it is already another instruction in machine code. What does this translation is a program called the assembler. Remember, the language is assembly (and varies from one processor to another, even having different notations) and the program that assembles (translates, compiles) from assembly to machine code is the assembler.

0: 8b 04 25 e8 03 00 00 mov eax,DWORD PTR ds:0x3e8

7: 89 1c 25 e8 03 00 00 mov DWORD PTR ds:0x3e8,ebx

This started to make things easier, but with more memory and more processing power, we needed more advanced ways of programming. The first high-level programming languages emerged: Fortran (1954, 1957 compiled) and then Algol (1958) which influenced languages we use today.

In Fortran, they decided to pass the task of translating the code we write to the machine. The idea was to write calculations in a way that a mathematician could be trained to code:

C AREA OF A TRIANGLE - HERON'S FORMULA

C INPUT - CARD READER UNIT 5, INTEGER INPUT

C OUTPUT -

C INTEGER VARIABLES START WITH I,J,K,L,M OR N

READ(5,501) IA,IB,IC

501 FORMAT(3I5)

IF (IA) 701, 777, 701

701 IF (IB) 702, 777, 702

702 IF (IC) 703, 777, 703

777 STOP 1

703 S = (IA + IB + IC) / 2.0

AREA = SQRT( S * (S - IA) * (S - IB) * (S - IC) )

WRITE(6,801) IA,IB,IC,AREA

801 FORMAT(4H A= ,I5,5H B= ,I5,5H C= ,I5,8H AREA= ,F10.2,

$13H SQUARE UNITS)

STOP

END

source: https://en.wikibooks.org/wiki/Fortran/Fortran_examples#Simple_Fortran_II_program

We were in 1957. Advancing rapidly, passing through Basic from 1964:

10 INPUT S$

20 LET T$=""

30 FOR I=LEN S$ TO 1 STEP -1

40 LET T$=T$+S$(I)

50 NEXT I

60 PRINT T$

In the example above, a program that reverses a string, written in Basic from the Sinclair ZX-81. https://rosettacode.org/wiki/Reverse_a_string#BASIC

In both the Fortran and Basic examples, note that each line of the program had an explicit number. This was important because it was common to use jump instructions, like GOTO, which changed the execution from one part of the program to another. The concept of blocks that we use today did not exist. In Go To Statement Considered Harmful by Edgar Dijkstra, arguments emerged for new languages to try to abolish or minimize the use of such constructions. These concepts had been emerging since 1959, but took shape with Dijkstra’s article in 1968. Since 1958, a committee formed by American and European scientists tried to create a language for programming ALGOL (Algorithm Language, born with the name Algebraic Language).

We reached Algol 68:

PROC reverse = (REF STRING s)VOID:

FOR i TO UPB s OVER 2 DO

CHAR c = s[i];

s[i] := s[UPB s - i + 1];

s[UPB s - i + 1] := c

OD;

main:

(

STRING text := "Was it a cat I saw";

reverse(text);

print((text, new line))

)

source: https://rosettacode.org/wiki/Category:ALGOL_68

Algol was undoubtedly the precursor of most imperative languages we use today. Code blocks and the cornerstone of structured programming.

Much closer to what we know today, absurdly close. Algol 68 influenced several languages, such as Pascal, C, and consequently Python.

A great advantage of high-level languages is to allow the creation of programs, regardless of the computer where they will be executed. The goal has always been to convert the program into machine code, and it continues to be so today. When we program today, we do not worry about machine code. We use a program to perform the conversion, either a compiler or an interpreter.

There are other branches, completely different from programming languages. We say different paradigms such as imperative (C, Basic, Fortran, Algol 68), functional (Lisp, Haskell, ML), or logical (Prolog).

Let’s see Lisp, also from the 60s/70s:

(defun count-change (amount coins

&optional

(length (1- (length coins)))

(cache (make-array (list (1+ amount) (length coins))

:initial-element nil)))

(cond ((< length 0) 0)

((< amount 0) 0)

((= amount 0) 1)

(t (or (aref cache amount length)

(setf (aref cache amount length)

(+ (count-change (- amount (first coins)) coins length cache)

(count-change amount (rest coins) (1- length) cache)))))))

; (compile 'count-change) ; for CLISP

(print (count-change 100 '(25 10 5 1))) ; = 242

(print (count-change 100000 '(100 50 25 10 5 1))) ; = 13398445413854501

(terpri)

source: https://rosettacode.org/wiki/Category:Common_Lisp

Here the idea of making the program closer to mathematics is stronger. Concepts like not being able to change the variable after the first assignment (immutability), recursion, and other features. But the compiler’s job was the same: to convert the program into machine code, which by nature is unstructured. Unstructured in the opposite sense of structured programming, as in machine language, the CPU uses addresses as line numbers and branch or jump instructions that implement the gotos.

All of this is equivalent, but with different presentations. Languages are like covers we use to hide the final code used by the computer. This idea works so well that we hardly remember it most of the time!

In the 80s and 90s, people began to have access to more computing power than they could use, of course, never too much. The thought of super performance in translating the program into machine code began to give way to languages that increased programmer productivity.

Still in the 80s and 90s, software became more expensive than hardware, and it is still so today. That is why languages like Perl, PHP, Python, among others gained so much market. They solved programmer productivity problems. Those who programmed in C++ switched to Perl and saw a new world. A report that was previously a C++ program of about 1000 lines became written with only 20 to 50 lines in Perl. This happened to me, right at the beginning of the commercial internet, around 95, 96. I was programming a billing system in C++ for Solaris, it ran very fast, but it was getting very large. The goal was not even performance, but to process large amounts of log files to generate billing reports. PERL and then PHP were magical.

C++ (reverse the string):

#include <iostream>

#include <string>

#include <algorithm>

int main()

{

std::string s;

std::getline(std::cin, s);

std::reverse(s.begin(), s.end()); // modifies s

std::cout << s << std::endl;

return 0;

}

source: https://rosettacode.org/wiki/Category:C%2B%2B

Perl:

$string = <STDIN>;

$flip = reverse $string;

print $flip;

Okay, in PERL, almost no one could read it later, but it was only 20 or 50 lines! In the worst case, it was faster to rewrite it. This gives an idea of why high performance was leaving priority during that time. Moreover, everyone was already used to having faster computers every year, and for faster, it was much faster than the previous ones. It was no longer worth polishing bits. What the bit polisher took a month to do, the script kiddie did in a day or two and with fewer bugs. This was one of the choices the programming community made in the 2000s. Things began to change again when a ceiling was reached in GHz processor speed. 4 or 5 GHz became a physical barrier. Increasing the clock of the next generation of processors became very difficult, as they generated so much heat at those frequencies that it prevented their use without proper cooling.

Each manufacturer then adopted various strategies to increase the processing speed of their processors. One of them was to improve processing efficiency per clock cycle. That is, to make an instruction that took 5 clock cycles take only 3. Another strategy was to increase the number of processors on the same chip; processors began to have multiple cores to compensate in some way for the lack of scalar speed increase. One of the problems that arose with multiple cores is that programming languages did not keep up with this movement. That is why we are still exploring new ways to program: asynchronous (which maximizes processor utilization during I/O input and output), concurrent (multiple programs being executed, sometimes one at a time, but quickly alternating between them, green threads, fibers) and parallel (which tries to execute several tasks at the same time).

Today, we are in another moment, where we pay for computing by the second (like in the 60s) in the case of cloud hosting (Function As A Service - FaaS - AWS Lambda). Performance becomes interesting again, and that is why modern languages try to solve the problem: how to generate efficient code for the processor without killing programmer productivity? The trend is to seek a balance between ease for the programmer and the performance of the generated program.

In places where this lack of performance is not critical, you can use Python with your eyes closed. For example, a web application server with fewer than 20 requests per second can easily run Django. On the other hand, a server with 2000 connections per second will have a lot of difficulty standing, both due to the long wait for results and the high memory usage. When performance becomes a problem, you need to combine Python with other languages. There are several ways to solve the scalability problem with Python, such as having multiple instances running and responding to requests. It is not a matter of being possible, but of being economically viable. The equation is cost to run (machines, infrastructure) x cost to develop (time, labor, quality).

But going back to the historical part of languages. In the late 60s, early 70s, when the first essays on Object Orientation emerged. The needs and applications of programs began to differentiate.

In the 70s, the idea of unstructured programming (traditional) shifted to structured programming with loops, without numbered lines of code, without goto, and this was the maxim that solved several problems (remember ALGOL). However, it did not work well for large programs or more complex problems. A recurring situation was to treat sets of similar data with the same functions, because in structured programming, functions are very important, being the basis of all program organization. Data and functions were completely separate entities.

A new way of organizing programs began to gain the market. In 67, Simula appeared:

Begin

Ref(TwinProcess) firstProc, secondProc;

Class TwinProcess(Name);

Text Name;

Begin

! Initial coroutine entry (creation)

Ref(TwinProcess) Twin;

OutText(Name); OutText(": Creation"); OutImage;

! First coroutine exit

Detach;

! Second coroutine entry

OutText(Name); OutText(": Second coroutine entry"); OutImage;

! Second coroutine exit: switch to the twin's coroutine

Resume(Twin);

! Last coroutine entry

OutText(Name); OutText(": Last coroutine entry"); OutImage;

Resume(Twin);

End;

Begin

firstProc :- New TwinProcess ("1st Proc");

secondProc :- New TwinProcess ("2nd Proc");

firstProc.Twin :- secondProc;

secondProc.Twin :- firstProc;

OutText("Starting"); OutImage;

Resume(firstProc);

OutText("End");

End;

End;

source: https://fr.wikipedia.org/wiki/Simula

Familiar? Classes, references (pointers) freed programmers from the stack because functions are limited in scope by the stack (stack). In the 70s and 80s, the heap was already widely used to avoid overloading the stack. But do you know what the stack and the heap are?

The stack is a well-known and widely used data structure. The great advantage of the stack is its ability to grow and shrink in groups. This characteristic makes the stack an ideal structure for performing context switching between functions. For example, when a function is called, the return address, as well as the function parameters, are placed on the processor’s stack. When the function returns, the returned value is placed on the stack (in a space that had already been reserved). It is the stack that makes each local variable in your program unique to each function call. This happens because local variables are allocated on the stack, and the function uses addresses relative to the top of the stack to find its local variables. Thus, when function A is called multiple times, each call has its own local variables, normally addressed by the offset or position on the stack, since with each call to A, the stack is displaced to accommodate the necessary space for the function’s variables.

Unlike the stack data structure, the processor’s stack is used as a base address for various operations, that is, the stack pointer marks the current top of the stack, and we access not only what is at the top but from the top. The stack has a limited size, nowadays something like one to two megabytes on Windows, but it can be increased. When we have a stack overflow error, it means we have exceeded the space allocated for the stack. This space can be fully utilized if we put too much data on the stack, creating, for example, giant arrays as local variables or when we recursively call the same function multiple times.

The heap is the solution found to manage free memory in the system, or the memory available for the process/program. The heap organizes free memory and fractions it into blocks as we request more space. It is the heap that organizes dynamically allocated memory. The heap is independent of the stack and can normally access huge amounts of memory. We use the heap in C when we call functions like malloc and free and in Python when we create new elements for a list. Heap management can be done by the operating system, but some languages have their own heap managers, allocating blocks of memory from the operating system but organizing and fractionating the use of these blocks internally.

I haven’t even gotten to what I wanted to talk about Object Orientation (OO). Returning to Simula 67 and the new style that consisted of passing a structure (struct or record) as a parameter to the function. This new way of organizing code creates a relationship between data structure and the program (code), and many argued that programs should organize data and code into blocks.

code + data 🡪 objects

In reality, the C++ language (1979) started as a mere translator for C (1969 ~ 1973), the original name was C with Classes (C with Classes, changing only in 1983 to C++). You wrote in C++ and it translated the classes into structures and the methods into functions that received the structure as the first parameter, and that is how objects are implemented to this day. Returning to the war of programming languages, the argument of the time was that BCPL (a language prior to C) was too low-level and that Simula was too slow.

But the theory did not stop there. Someone had the idea that in addition to the data, we could pass a table of methods (pointers to functions) and swap these methods to implement new behaviors. This is where concepts like inheritance, polymorphism, overloading, etc., come from.

The classic example of OO is a program that needs to work with geometric shapes, but it could be any code with common behavior. Imagine that you only have functions and cannot use objects. The program has to calculate the area of a triangle. You then create the function: area(a, h)

And the function is beautiful, small, fast, and clear.

Then someone asks to calculate the area of a rectangle. The interface is similar: area_rect(a, b)

But the formulas are different, and now you have two functions.

Another programmer makes a discovery: if I use a third variable to indicate whether it is a rectangle or a triangle, I can have a function area(type, a, b) that calculates the area, changing the formula according to the type.

area(type, a, b):

if type == "triangle":

return area(a,b)

if type == "rectangle":

return area_rect(a,b)

Okay, problem solved for now.

A week later, the program has to calculate areas of other geometric shapes, some with 2, others with 3 or 4 parameters. A big problem occurs: the area function interface no longer works, as it does not support 3 or 4 parameters!

Someone then thinks of organizing this differently (OO): I will create a structure where the shape type is a field, and the function used for the calculation is part of that structure and knows how to calculate the area.

Now you have a mechanism, with a unique interface that receives the structure and knows how to calculate its area, an object.

This makes the program very flexible, as the same program working with a unique interface can support more shapes. If new shapes need to be added, just re-specify a new structure and modify the way of calculating, but without modifying the interface or the code that already uses the previous shapes. The interface becomes shape.area() where shape is an object, derived from a class that contains the data and the program responsible for calculating the area.

And this was one of the leaps made in the way we write programs. You only see this type of problem when you have to change your program, when the program is maintained for some time and needs to be constantly modified. It is this type of problem that OO solves.

Another example, you can create a structure that associates several shapes: circles, triangles, and rectangles. Internally, it calculates the area of these shapes by summing the area of each one.

How to have such flexibility with simple functions? You can have structures (struct or records) that contain the type of shape and a pointer to the function that calculates the area. In languages like C, you can pass a structure and make a transformation or cast, asking the compiler: believe me, I know what I’m doing. This technique is used in the implementation of CPython.

Let’s see how it is in reality our PyObject:

typedef struct _object {

_PyObject_HEAD_EXTRA

Py_ssize_t ob_refcnt;

struct _typeobject *ob_type;

} PyObject;

It is just a structure with pointers to navigate the list of objects, the reference counter, and a pointer to the type of the object. Looking at the C code of CPython is very interesting. I recommend it to everyone studying C.

Soon, Object Orientation is just “syntax sugar,” just a simpler way to write complex programs. Now, if you do not understand where the need for OO came from, you will not understand much. Understanding how classes are implemented reveals very interesting details.

A class can be seen as a structure with a list of methods. When you create an object, another structure is created. It contains the class and a structure for the data; the two together are the object. The data is used by the methods listed in the class. When you use inheritance, you are manipulating this list of methods in different ways; some will originate from the superclass, others from the current class. It is very interesting to see how it is implemented; I really recommend taking a look behind the scenes.

Returning to Python, classes are dictionaries with methods associated with objects. This is one of the reasons Python is slow (or slower than compiled languages). When you compile a program in C++, the method that an object calls is practically set in stone (in the code generated by the compiler) or comes from a limited dynamic list. In Python, you can change the methods of each object because the object has a copy of the list of methods (dictionary) with its data. That is why we have self; remember the structure that was the first parameter in the conversion from C++ to C?

It is our self. In C++ and Java, it is called this, but it works the same way, being that in these languages the first parameter is hidden (I personally do not like Python’s self, but it is a choice that Guido made when implementing the language: explicit is better than implicit). That is why we do self.A = 1. We create everything in self and not in the class itself.

My point is that understanding a bit of history and even how compilers are built can give us a better idea of how things work. Why is it slow, why has such a problem not been solved, and so on. Languages continue to be ways of writing a program in a format that a compiler or interpreter can execute. Whether language A or B, once translated, it will execute in machine code; the compiler does the translation directly, and the interpreter does it at runtime.

But in Python, it is not like that… no. The interpreter does not call machine code; in reality, it executes our program line by line, calling functions in C that implement each command, function, etc. These calls run code at full speed, but this small pause between calls slows everything down. The interpreter can also call Python code. CPython, the standard interpreter we all use, is a program in C that reads your Python program as a text file and starts identifying the parts of your program.

As this is slow, to speed up interpretation (conversion of text to code), they created Python bytecode and the Python virtual machine, which is not machine code, with instructions still at a very high level to be executed directly by the processor. Thus, in Python, the program is translated into bytecode, and the program in C, in the case of CPython, executes this bytecode.

Now, your program is actually executed by another program written in C, but now we are talking about a program that executes another. That is why it is slower; that is why it cannot be compared to the performance of Rust or C or other compiled languages. The interpreter is an intermediary that inhibits optimizations in converting the program to machine code but has more direct and dynamic access to the program. In compiled languages, the generated code undergoes another round of optimizations, where operations are simplified and higher-performance instructions are used. The compiled program runs at the best speed of the machine because there are no intermediaries; the code is executed directly by the CPU.

Java does something cool with this idea of bytecode; they created the JVM (Java Virtual Machine) bytecode at a low level, almost like a real processor, but with a unique definition (similar to the SPARC processors that Sun produced at the time, requiring minimal conversions when the code ran on Sun machines). Java’s bytecode isolates the compiler from the various platforms that run the real machine. The entire Java world was built around it, allowing you to compile once and run anywhere, Java’s motto, because the compiler generates a binary using the JVM bytecode, which is translated by the Java Runtime when we execute the program. Soon after, Sun began to create JIT.

Just in Time Compilation (JIT) is nothing more than taking the Java bytecode (written in the virtual machine code, but very close to a real processor) and translating it into native machine code (which will run the program). That is why the VM “heats up,” meaning the code gets faster as it is called several times. In reality, over time, part of the bytecode is converted into native code, and on the second call, the program converted to machine code is executed directly, skipping the conversion steps.

Returning to Python, why is this not done? Because Python is a dynamic language. To have the characteristics of Python, such as being able to change methods, everything is an object (and each object can be different), you lose the ease of deterministically translating these programs into native code because the program can change. Many have tried to solve this problem. The best so far is Cython, which solved it by limiting what could be done, that is, creating a subset of the language easier to translate into C and then into machine code. The case of PyPy is the JIT for Python.

That is why languages like Go seem, but are not Python. They did a great job maintaining part of Python’s syntax, but in a set that can be compiled. One of the differences is sacrificing classes! They went back a level, sticking to structures, but instead of having code + data in an object, they have data (structs) with methods that can be added. The interface is no longer defined by the class type but by the capability or by the implementation of such a struct having an interface that implements a method.

type rect struct {

width, height int

}

func (r *rect) area() int {

return r.width * r.height

}

In Python, the object x with a method A would be called as x.A().

Being x an object of a class C, for the method A to exist, C or one of its superclasses implements A. The interface between classes is maintained as long as the objects come from the same origin (superclass), in this case, a class hierarchy.

This would be simple, but we have Python’s duck typing: if class C implements A and class D also does, x can be either an object of C or of D, x.A() is valid for both a C object and a D object, that is, what matters is that the object has the implementation of method A, it does not matter if it comes from C or D; and even if classes C and D have some hierarchical relationship between them.

In Go and Rust, C would be one structure, D another. There is no inheritance. Then we can create a method A that works with structures C and another method A that works with structures D. Remember that the first parameter was the object? In Rust and Go, the first parameter has the type of the structure for which you are implementing the method. This is resolved at compile time and does not hinder the speed of code execution, but it is subtle. It is the polymorphism of OO in action, but without being OO. In Go and Rust, you can also declare an interface that implements method A, and create a function that works with objects or structures that implement the method A interface, thus being able to write generic code without using OO or forcing a relationship of inheritance between the various implementations. Python uses Protocols to achieve the same goal.

So, what is the importance of theory? For things to advance, we need new names for each concept. I need to trust that when I say class, you have a precise idea of the concept I am communicating. Without theory, you cannot understand the texts, and that is why many people complain that it is so difficult, but in reality, it is a lack of theoretical knowledge. We haven’t even talked about concurrency and other subjects; that will be for another day. But theory is important, and practice is also important; we need both, and there is room for structured programming, OO, functional, logical, etc.

Extra Links#

- Object Orientation with Python: Chapter 10

- Example of Object Orientation with drawing: Chapter 13

- C Classes: a quick introduction to the C language for those who already program in Python.

Credits#

[1] By Unknown author - U.S. Army Photo, Public Domain, https://commons.wikimedia.org/w/index.php?curid=55124

[2] By Seattle Municipal Archives from Seattle, WA - Seattle Municipal Archives, CC BY 2.0, https://commons.wikimedia.org/w/index.php?curid=5288715